The Transformative Role of Large Language Models in Time Series Forecasting

Time Series Forecasting (TSF) has long been a cornerstone of data science, enabling businesses and researchers to predict future values based on historical data. Traditional methods, while effective, often struggle with the complexity and variability inherent in real-world data. Large Language Models (LLMs) offer a different approach: they can capture intricate patterns, incorporate unstructured data such as text, and generate natural-language explanations for their predictions. This article explores the use of LLMs in TSF, drawing on recent studies and frameworks. Integrating LLMs into TSF also raises a number of open questions and challenges for data scientists, which are listed throughout the sections below.

Capturing Complex Patterns

One of the most significant advantages of LLMs in TSF is their ability to capture complex patterns and dependencies in time series data. Traditional models often assume a fixed structure (for example, a linear dependence on past values) and may therefore miss subtle relationships, whereas LLMs can learn from large amounts of data and identify intricate patterns that are difficult to specify by hand. This capability is particularly beneficial in scenarios where time series data exhibits high variability and non-linearity.

- How can we ensure that the LLMs accurately capture all relevant patterns without overfitting to noise in the data?

- What techniques can be employed to validate the patterns identified by LLMs?
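One widely used safeguard against fitting noise, whatever the model, is rolling-origin (walk-forward) evaluation against simple baselines: every error is measured on data the model has not seen. The sketch below assumes a forecaster is any function mapping a history and a horizon to a list of predictions, so an LLM-based forecaster could be wrapped to fit the same signature. The names `last_value`, `seasonal_naive` and `rolling_origin_mae` are illustrative, not taken from a specific library.

```python
from statistics import mean
from typing import Callable, Sequence

# A forecaster maps (history, horizon) to a list of predictions. An LLM-based
# forecaster would need to be wrapped to fit this (hypothetical) signature.
Forecaster = Callable[[Sequence[float], int], list[float]]


def last_value(history: Sequence[float], horizon: int) -> list[float]:
    """Naive baseline: repeat the last observation."""
    return [history[-1]] * horizon


def seasonal_naive(history: Sequence[float], horizon: int, season: int = 7) -> list[float]:
    """Seasonal naive baseline: repeat the last full season."""
    if len(history) < season:
        raise ValueError("history shorter than one season")
    last = list(history[-season:])
    return [last[i % season] for i in range(horizon)]


def rolling_origin_mae(series: Sequence[float], forecast: Forecaster,
                       initial: int, horizon: int, step: int = 1) -> float:
    """Mean absolute error over expanding-window forecast origins.

    Each origin only uses data before it, so every error is out of sample;
    a model that has fitted noise shows up here as a higher error.
    """
    errors: list[float] = []
    for origin in range(initial, len(series) - horizon + 1, step):
        predicted = forecast(series[:origin], horizon)
        actual = series[origin:origin + horizon]
        errors.extend(abs(a - p) for a, p in zip(actual, predicted))
    if not errors:
        raise ValueError("series too short for this initial window and horizon")
    return mean(errors)


# Toy series with a 7-step cycle: seasonal_naive is exact, last_value is not.
series = [float(i % 7) for i in range(60)]
print(rolling_origin_mae(series, seasonal_naive, initial=28, horizon=7))  # 0.0
print(rolling_origin_mae(series, last_value, initial=28, horizon=7) > 0)  # True
```

A candidate model that does not beat these baselines under the same rolling evaluation is unlikely to have captured patterns beyond what simple rules already explain.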

Handling Unstructured Data

LLMs excel at handling unstructured data such as text. This makes them particularly useful when a time series is accompanied by textual information, such as news articles or social media posts. By converting such text into a form the LLM can process alongside the numeric series, we can use its ability to understand language to add context that may improve the accuracy of forecasts.

- How can we effectively preprocess and integrate unstructured textual data with structured time series data?

- What are the best practices for ensuring that the textual data does not introduce bias or noise into the forecasting model?
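One concrete way textual data can bias a forecast is look-ahead leakage: text published after the forecast target slips into the features. The sketch below aggregates a per-day text score and shifts it by a lag before it is joined to the series. `score_headline` is a hypothetical keyword-based stand-in for an LLM or sentiment model, and the keyword sets and headlines are invented for illustration.

```python
from datetime import date, timedelta


def score_headline(text: str) -> float:
    """Hypothetical stand-in for an LLM sentiment call: +1 per positive, -1 per negative keyword."""
    positive = {"beats", "growth", "record"}
    negative = {"misses", "recall", "lawsuit"}
    words = text.lower().split()
    return float(sum(w in positive for w in words) - sum(w in negative for w in words))


def daily_text_feature(headlines: dict[date, list[str]], days: list[date],
                       lag: int = 1) -> list[float]:
    """Average headline score per day, shifted by `lag` days.

    With lag=1 the feature for day t only uses text published on day t-1,
    so the forecast for day t never sees text written after the outcome.
    Days without text get a neutral 0.0 instead of being dropped.
    """
    features = []
    for d in days:
        source_day = d - timedelta(days=lag)
        scores = [score_headline(h) for h in headlines.get(source_day, [])]
        features.append(sum(scores) / len(scores) if scores else 0.0)
    return features


days = [date(2024, 1, 1) + timedelta(days=i) for i in range(3)]
news = {
    date(2024, 1, 1): ["Company beats estimates", "Record growth reported"],
    date(2024, 1, 2): ["Product recall announced"],
}
print(daily_text_feature(news, days))  # [0.0, 1.5, -1.0]
```

Filling empty days with a neutral value keeps the feature aligned with the series; whether 0.0 is truly neutral depends on the scoring model and should be checked.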

Adaptability and Fine-Tuning

The adaptability of LLMs is another key advantage. They can be fine-tuned for specific forecasting tasks, and parameter-efficient methods such as LoRA (Hu et al., 2021) make this cheaper by training only a small number of additional parameters. This lets them learn from new data and adjust their predictions accordingly, which is crucial in dynamic environments where time series data is constantly evolving. Fine-tuning involves further training a pretrained model on a specific forecasting task so that it adapts to the context and semantics of the time series data.

- How can we efficiently fine-tune LLMs to adapt to rapidly changing data environments?

- What strategies can be used to ensure that the fine-tuned models remain robust and accurate over time?
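As an illustration of parameter-efficient fine-tuning, the sketch below implements the forward pass of LoRA (Hu et al., 2021): the pretrained weight W stays frozen and only a low-rank update is trained, giving h = W x + (alpha / r) B A x. It is written in plain Python with toy dimensions and is not tied to any framework; the class and function names are hypothetical, and fine-tuning an actual LLM would use a deep learning library.

```python
import random


def matvec(m: list[list[float]], v: list[float]) -> list[float]:
    """Matrix-vector product for small list-of-lists matrices."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


class LoRALinear:
    """Frozen weight W (d_out x d_in) plus a trainable low-rank update B @ A."""

    def __init__(self, weight: list[list[float]], rank: int, alpha: float, seed: int = 0):
        rng = random.Random(seed)
        self.weight = weight  # frozen: not updated during fine-tuning
        d_out, d_in = len(weight), len(weight[0])
        self.scale = alpha / rank
        # A starts with small random values and B with zeros, so the adapted
        # layer initially behaves exactly like the pretrained layer.
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(rank)]
        self.B = [[0.0] * rank for _ in range(d_out)]

    def forward(self, x: list[float]) -> list[float]:
        base = matvec(self.weight, x)              # W x
        update = matvec(self.B, matvec(self.A, x))  # B (A x)
        return [b + self.scale * u for b, u in zip(base, update)]

    def trainable_params(self) -> int:
        return len(self.A) * len(self.A[0]) + len(self.B) * len(self.B[0])


def full_params(d_out: int, d_in: int) -> int:
    return d_out * d_in


def lora_params(d_out: int, d_in: int, rank: int) -> int:
    return rank * (d_in + d_out)


layer = LoRALinear([[1.0, 2.0], [3.0, 4.0]], rank=1, alpha=2.0)
print(layer.forward([1.0, 1.0]))      # [3.0, 7.0], same as the frozen layer at init
print(full_params(4096, 4096))        # 16777216
print(lora_params(4096, 4096, 8))     # 65536
```

For a 4096 x 4096 weight at rank 8, the trainable parameters drop from 16,777,216 to 65,536 (about 0.4%), which is what makes repeated fine-tuning on fresh data more affordable.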

Explaining Predictions

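LLMs can provide explanations for their predictions in natural language, which is an advantage over black-box deep learning forecasters. By leveraging their ability to understand and generate text, LLMs can describe the factors they consider to be influencing their forecasts, although such generated explanations are not guaranteed to reflect how the model actually arrived at its numbers. This transparency is particularly valuable in decision-making processes, where understanding the rationale behind predictions is essential. Because both the forecasts and their explanations depend on what goes into the prompt, a few questions arise here: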

- How can we effectively integrate external knowledge into the input prompts without overwhelming the model?

- What are the best practices for paraphrasing input sequences to natural language to enhance model performance?
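Whatever the prompt contains, it helps to request the forecast and its rationale in a machine-checkable form, so that an explanation is never accepted without a well-formed numeric forecast. The sketch below assumes the model is asked to reply with a JSON object with hypothetical keys `forecast` and `rationale`; the LLM call itself is omitted and the example reply is invented.

```python
import json
import math


def parse_forecast_reply(reply: str, horizon: int) -> tuple[list[float], str]:
    """Validate a reply of the (assumed) form {"forecast": [...], "rationale": "..."}.

    Raises ValueError rather than accepting a malformed answer, so a fluent
    explanation cannot stand in for a missing or wrong-length forecast.
    """
    try:
        data = json.loads(reply)
    except json.JSONDecodeError as exc:
        raise ValueError(f"reply is not valid JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise ValueError("reply must be a JSON object")

    values = data.get("forecast")
    if not isinstance(values, list) or len(values) != horizon:
        raise ValueError(f"expected a list of {horizon} forecast values")
    try:
        forecast = [float(v) for v in values]
    except (TypeError, ValueError) as exc:
        raise ValueError("forecast values must be numeric") from exc
    if not all(math.isfinite(v) for v in forecast):
        raise ValueError("forecast contains NaN or infinite values")

    rationale = data.get("rationale")
    if not isinstance(rationale, str) or not rationale.strip():
        raise ValueError("missing rationale")
    return forecast, rationale.strip()


reply = '{"forecast": [101.5, 103.0], "rationale": "Upward trend continues; no holiday effect expected."}'
forecast, rationale = parse_forecast_reply(reply, horizon=2)
print(forecast)  # [101.5, 103.0]
print(rationale)
```

Rejected replies can be retried or logged; checking the rationale against the data (for example, whether a claimed "upward trend" is actually present) remains a separate, harder problem.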

Techniques to Enhance Performance

Recent studies have proposed various techniques to enhance the performance of LLMs in TSF. One approach involves incorporating external human knowledge into input prompts: by giving the LLM additional context, such as known seasonal effects or upcoming events, we can improve its ability to capture relevant patterns and dependencies. Another technique involves paraphrasing input sequences to natural language, which has been reported to substantially improve the performance of LLMs in time series forecasting (Tang et al., 2024).
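Both techniques can be sketched as plain prompt construction: the numeric history is paraphrased into sentences, and optional domain knowledge is placed before it as background. The template wording, the `paraphrase_series` and `build_prompt` functions and their parameters are assumptions for illustration, not the exact prompts used in the studies referenced here.

```python
from typing import Optional


def paraphrase_series(values: list[float], unit: str = "") -> str:
    """Describe each step of a numeric sequence in a short English sentence."""
    sentences = []
    for i, v in enumerate(values):
        step = i + 1
        if i == 0:
            sentences.append(f"At step {step} the value was {v:g}{unit}.")
            continue
        prev = values[i - 1]
        if v > prev:
            change = f"rose to {v:g}{unit}"
        elif v < prev:
            change = f"fell to {v:g}{unit}"
        else:
            change = f"stayed at {v:g}{unit}"
        sentences.append(f"At step {step} it {change}.")
    return " ".join(sentences)


def build_prompt(values: list[float], horizon: int,
                 domain_knowledge: Optional[str] = None, unit: str = "") -> str:
    """Assemble a prompt: optional background knowledge, then the paraphrased history."""
    parts = []
    if domain_knowledge:
        # External human knowledge goes first and is kept short,
        # so it does not crowd out the data itself.
        parts.append(f"Background: {domain_knowledge.strip()}")
    parts.append(f"History: {paraphrase_series(values, unit)}")
    parts.append(f'Predict the next {horizon} values. Reply in JSON with a "forecast" '
                 f'list of {horizon} numbers and a short "rationale".')
    return "\n".join(parts)


print(build_prompt([120.0, 125.0, 125.0, 118.0], horizon=2,
                   domain_knowledge="Demand usually drops in the week after a holiday."))
```

Paraphrasing makes the prompt longer, so for long histories it may be necessary to paraphrase only a recent window or aggregated statistics; which variant works best is an empirical question for each dataset.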

LLM-PS Framework

The LLM-PS framework (Tang et al., n.d.) is a novel approach to enhancing time series forecasting with LLMs. LLM-PS leverages temporal patterns and semantics from time series data through a Multi-Scale Convolutional Neural Network (MSCNN) and a Time-to-Text (T2T) module. The MSCNN captures both short-term fluctuations and long-term trends, while the T2T module extracts semantic information from the series. The authors report state-of-the-art performance across short-term, long-term, few-shot, and zero-shot forecasting tasks. Open questions and challenges include:

- What are the potential limitations of the LLM-PS framework, and how can they be addressed?

- How can we optimize the LLM-PS framework to handle different types of time series data effectively?
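LLM-PS learns its multi-scale convolutional filters from data. The sketch below only illustrates the underlying multi-scale idea with fixed moving-average kernels of different widths, a simplification that is not the MSCNN itself and does not cover the T2T module: a wide kernel follows the slow trend, and subtracting it leaves the short-term fluctuations. Function names and kernel widths are hypothetical.

```python
def moving_average(x: list[float], width: int) -> list[float]:
    """Centered moving average with an odd window; edges are padded by repeating end values."""
    if width < 1 or width % 2 == 0:
        raise ValueError("width must be a positive odd number")
    half = width // 2
    padded = [x[0]] * half + list(x) + [x[-1]] * half
    return [sum(padded[i:i + width]) / width for i in range(len(x))]


def multi_scale_features(x: list[float], widths: tuple[int, ...] = (3, 7, 15)):
    """Smooth the series at several scales; each scale plays the role of one channel.

    Narrow windows keep short-term fluctuations, wide windows keep the trend.
    The residual against the widest scale isolates the short-term component.
    """
    smooth = {w: moving_average(x, w) for w in widths}
    trend = smooth[max(widths)]
    residual = [a - t for a, t in zip(x, trend)]
    return smooth, residual


# Toy series: a slope of 0.5 per step plus a +/-1 zigzag.
x = [0.5 * i + (1.0 if i % 2 == 0 else -1.0) for i in range(30)]
smooth, residual = multi_scale_features(x, widths=(3, 15))
```

In this toy example the width-15 channel follows the upward slope while the residual keeps most of the step-to-step zigzag; a learned multi-scale network replaces these fixed kernels with filters fitted to the forecasting objective.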

Conclusion

The integration of LLMs into time series forecasting represents a significant advancement in the field of data science. Their ability to capture complex patterns, handle unstructured data, and provide interpretable predictions makes them a powerful tool for forecasting tasks. As research in this area continues to evolve, we can expect further innovations and improvements in the performance of LLMs in TSF. By leveraging the insights and techniques discussed in this article, data scientists can harness the potential of LLMs to produce more accurate and interpretable forecasts. I hope to find answers and solutions to the questions raised above. For now, the application of LLMs to support or improve the results of TSF is an interesting field of research.

Literature

Braei, M., & Wagner, S. (2020). Anomaly detection in univariate time-series: A survey on the state-of-the-art. Conference Paper.

Chang, C., Wang, W.-Y., Peng, W.-C., & Chen, T.-F. (2025). LLM4TS: Aligning Pre-Trained LLMs as Data-Efficient Time-Series Forecasters. Article.

Chen, C., Oliveira, G., Noghabi, H. S., & Sylvain, T. (2024). LLM-TS Integrator: Integrating LLM for enhanced time series modeling. arXiv preprint arXiv:2410.16489.

Cheng, M., Liu, Q., Liu, Z., Zhang, H., Zhang, R., & Chen, E. (2023). TimeMAE: Self-supervised representations of time series with decoupled masked autoencoders. arXiv preprint arXiv:2303.00320.

Chung, H., Kim, J., Kwon, J.-m., Jeon, K.-H., Lee, M. S., & Choi, E. (2023). Text-to-ECG: 12-lead electrocardiogram synthesis conditioned on clinical text reports. In IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 1-5. IEEE.

Cleveland, R. B., Cleveland, W. S., McRae, J. E., & Terpenning, I. (1990). STL: A Seasonal-Trend Decomposition Procedure Based on Loess. Journal of Official Statistics, 6(1), 3-73.

Cochran, W. T., Cooley, J. W., Favin, D. L., Helms, H. D., Kaenel, R. A., Lang, W. W., Maling, G. C., Nelson, D. E., Rader, C. M., & Welch, P. D. (1967). What is the fast Fourier transform? Proceedings of the IEEE, 55(10), 1664-1674.

Das, A., Kong, W., Leach, A., Sen, R., & Yu, R. (2023). Long-term forecasting with TiDE: Time-series dense encoder. arXiv preprint arXiv:2304.08424.

Défossez, A., Copet, J., Synnaeve, G., & Adi, Y. (2023). High fidelity neural audio compression. Transactions on Machine Learning Research (TMLR).

Devlin, J., Chang, M., Lee, K., & Toutanova, K. (2018). BERT: Pre-training of deep bidirectional transformers for language understanding. CoRR, abs/1810.04805.

Gao, S.-H., Cheng, M.-M., Zhao, K., Zhang, X.-Y., Yang, M.-H., & Torr, P. (2019). Res2Net: A new multi-scale backbone architecture. IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI), 43(2), 652-662.

Gardner, E. (2006). Exponential smoothing: The state of the art—Part II. International Journal of Forecasting, 22(4), 637-666.

Gu, A., Goel, K., & Ré, C. (2022). Efficiently modeling long sequences with structured state spaces. In International Conference on Learning Representations (ICLR).

He, K., Zhang, X., Ren, S., & Sun, J. (2016). Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 770-778.

Hochreiter, S., & Schmidhuber, J. (1997). Long short-term memory. Neural Computation, 9(8), 1735-1780.

Hsu, W.-N., Bolte, B., Tsai, Y.-H. H., Lakhotia, K., Salakhutdinov, R., & Mohamed, A. (2021). HuBERT: Self-supervised speech representation learning by masked prediction of hidden units. IEEE/ACM Transactions on Audio, Speech, and Language Processing (TASLP), 29, 3451-3460.

Hu, E. J., Shen, Y., Wallis, P., Allen-Zhu, Z., Li, Y., Wang, S., Wang, L., & Chen, W. (2021). LoRA: Low-rank adaptation of large language models. arXiv preprint arXiv:2106.09685.

Hyndman, R. (2018). Forecasting: principles and practice. OTexts.

Jin, M., Wang, S., Ma, L., Chu, Z., Zhang, J., Shi, X., Chen, P.-Y., Liang, Y., Li, Y.-f., Pan, S., et al. (2024). Time-LLM: Time series forecasting by reprogramming large language models. In International Conference on Learning Representations (ICLR).

Kowsher, M., Sobuj, M. S. I., Prottasha, N. J., Alanis, E. A., Garibay, O. O., & Yousefi, N. (2024). LLM-Mixer: Multiscale mixing in LLMs for time series forecasting. arXiv preprint arXiv:2410.11674.

Ledro, C., Nosella, A., & Vinelli, A. (2022). Artificial intelligence in customer relationship management: Literature review and future research directions. Journal of Business & Industrial Marketing.

Liu, P., Guo, H., Dai, T., Li, N., Bao, J., Ren, X., Jiang, Y., & Xia, S.-T. (2024a). CALF: Aligning LLMs for time series forecasting via cross-modal fine-tuning. arXiv preprint arXiv:2403.07300.

Liu, Y., Hu, T., Zhang, H., Wu, H., Wang, S., Ma, L., & Long, M. (2024b). iTransformer: Inverted transformers are effective for time series forecasting. International Conference on Learning Representations (ICLR).

Long, J. (2023). Large Language Model Guided Tree-of-Thought. arXiv preprint. https://doi.org/10.48550/arXiv.2305.08291

Makridakis, S., Spiliotis, E., & Assimakopoulos, V. (2018). The M4 competition: Results, findings, conclusion and way forward. International Journal of Forecasting (IJF), 34(4), 802-808.

Moody, G. B., & Mark, R. G. (2001). The impact of the MIT-BIH arrhythmia database. IEEE Engineering in Medicine and Biology Magazine (IEMBM), 20(3), 45-50.

Motsi, S. T. (n.d.). Leveraging language models for time series forecasting: Unlocking the power of LLMs. Medium. Retrieved December 2, 2024, from https://medium.com/@simbatmotsi/leveraging-language-models-for-time-series-forecasting-unlocking-the-power-of-llms-269dac965b10

Nie, Y., Nguyen, H., Sinthong, P., & Kalagnanam, J. (2023). A time series is worth 64 words: Long-term forecasting with transformers. In International Conference on Learning Representations (ICLR).

Oreshkin, B. N., Carpov, D., Chapados, N., & Bengio, Y. (2019a). N-BEATS: Neural basis expansion analysis for interpretable time series forecasting. arXiv preprint arXiv:1905.10437.

Oreshkin, B. N., Carpov, D., Chapados, N., & Bengio, Y. (2019b). N-BEATS: Neural basis expansion analysis for interpretable time series forecasting. International Conference on Learning Representations (ICLR).

Sel, B., Tawaha, A. A., Khattar, V., Wang, L., Jia, R., & Jin, M. (2023). Algorithm of Thoughts: Enhancing Exploration of Ideas in Large Language Models. arXiv preprint. https://doi.org/10.48550/arXiv.2308.10379

Shah, R. S., Marupudi, V., Koenen, R., & Varma, S. (2023). Numeric Magnitude Comparison Effects in Large Language Models. Conference Paper.

Talaviya, A. (2023, July 27). Building Generative AI Applications with LangChain and OpenAI API. Analytics Vidhya. https://www.analyticsvidhya.com/blog/2023/07/generative-ai-applications-using-langchain-and-openai-api/

Tang, H., Zhang, C., Jin, M., & Du, M. (2025). Time Series Forecasting with LLMs: Understanding and Enhancing Model Capabilities. ACM SIGKDD Explorations Newsletter.

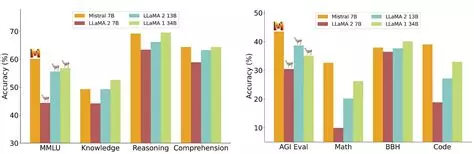

Tang, H., Zhang, C., Jin, M., Yu, Q., Wang, Z., Jin, X., Zhang, Y., & Du, M. (2024, February). Time series forecasting with LLMs: Understanding and enhancing model capabilities. Rutgers University, Shanghai Jiaotong University, University of Liverpool, New Jersey Institute of Technology, Xi’an Jiaotong-Liverpool University.

Tang, J., Chen, S., Gong, C., Zhang, J., & Tao, D. (n.d.). LLM-PS: Empowering large language models for time series forecasting with temporal patterns and semantics.

Taylor, P. (2023, August 22). Amount of data created, consumed, and stored 2010-2020, with forecasts to 2025. Statista. https://www.statista.com/statistics/871513/worldwide-data-created/#statisticContainer

Thudumu, S., Branch, P., Jin, J., & Singh, J. (2020). A comprehensive survey of anomaly detection techniques for high dimensional big data. Journal of Big Data, 7(42). https://doi.org/10.1186/s40537-020-00320-x

Waleed, H., Gadsden, A. S., & Yawney, J. (2022). Financial Fraud: A Review of Anomaly Detection Techniques and Recent Advances. Expert Systems with Applications, 193, 116429. https://doi.org/10.1016/j.eswa.2021.116429

Wei, J., Wang, X., Schuurmans, D., Bosma, M., Ichter, B., Xia, F., Chi, E. H., Le, Q. V., & Zhou, D. (2022). Chain-of-Thought Prompting Elicits Reasoning in Large Language Models. In Advances in Neural Information Processing Systems 35 (NeurIPS 2022), Main Conference Track. https://doi.org/10.48550/arXiv.2201.11903

Xue, H., & Salim, F. D. (2023). PromptCast: A New Prompt-Based Learning Paradigm for Time Series Forecasting. Article.

Yinfang, C., Huaibing, X., Minghua, M., Kang, Y., Xin, G., Liu, S., Yunjie, C., Xuedong, G., Hao, F., Ming, W., Jun, Z., Supriyo, G., Xuchao, Z., Chaoyun, Z., Qingwei, L., Saravan, R., & Dongmei, Z. (2023). Empowering Practical Root Cause Analysis by Large Language Models for Cloud Incidents. arXiv preprint. https://doi.org/10.48550/arXiv.2305.15778

Zhou, D., Schärli, N., Hou, L., Wei, J., Scales, N., Wang, X., Schuurmans, D., Cui, C., Bousquet, O., Le, Q., & Chi, E. (2023). Least-to-Most Prompting Enables Complex Reasoning in Large Language Models. arXiv preprint. https://doi.org/10.48550/arXiv.2205.10625